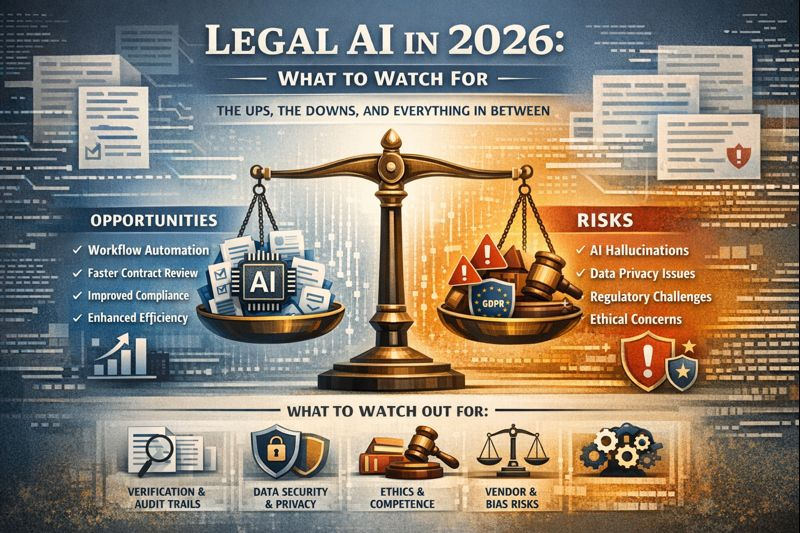

2026 is shaping up to be the year legal AI becomes less of a “tool experiment” and more of an operational system—embedded into how contracts are drafted, reviewed, negotiated, stored, and governed. The upside is real: faster cycle times, better visibility into risk, and more consistent outputs across teams. The downside is also real: regulatory pressure, confidentiality landmines, hallucination-driven errors, and vendor ecosystems that can outpace a legal team’s ability to evaluate what’s safe to deploy.

Below is a practical guide to what to watch out for in 2026—written for in-house teams, law firms, founders, operators, and anyone adopting legal AI in real workflows.

1) The biggest shift: legal AI moves from “chat” to “workflow”

In 2026, the most valuable legal AI won’t look like a standalone chatbot. It will look like structured workflows: intake → document upload → clause extraction → risk scoring → redlines → approvals → secure storage → audit trail. This shift matters because the real legal risk rarely comes from a single answer; it comes from how that answer travels through your organization—who sees it, who edits it, what data it touches, and whether anyone can prove what happened later.

This is also why governance is becoming inseparable from product. Frameworks like NIST’s AI Risk Management Framework (AI RMF) emphasize managing AI risk across the system lifecycle—not just checking outputs at the end.

What to do in 2026: prioritize tools that support structured review steps, role-based access, and auditable logging—especially for contract workflows.
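
To make "structured review steps, role-based access, and auditable logging" concrete, here is a minimal sketch in Python. The stage names come from the pipeline described above; the roles, the permission table, and the `ContractWorkflow` class are hypothetical and only illustrate the idea. They are not any particular product's design.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Stage names follow the pipeline described in the text.
STAGES = ["intake", "upload", "clause_extraction", "risk_scoring",
          "redlines", "approval", "storage"]

# Hypothetical role-to-stage permissions; a real deployment would load
# these from its own access-control system.
PERMISSIONS = {
    "paralegal": {"intake", "upload"},
    "ai_service": {"clause_extraction", "risk_scoring", "redlines"},
    "attorney": {"redlines", "approval", "storage"},
}


@dataclass
class ContractWorkflow:
    contract_id: str
    position: int = 0  # index into STAGES of the next stage to complete
    audit_log: list = field(default_factory=list)

    def advance(self, actor: str, role: str) -> str:
        """Complete the next stage if the role is allowed; log every attempt."""
        if self.position >= len(STAGES):
            raise RuntimeError("workflow already complete")
        stage = STAGES[self.position]
        allowed = stage in PERMISSIONS.get(role, set())
        # Denied attempts are logged too, so the trail shows who tried what.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "contract_id": self.contract_id,
            "stage": stage,
            "actor": actor,
            "role": role,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not complete stage {stage!r}")
        self.position += 1
        return stage
```

The design choice worth noting is that the log entry is written before the permission check raises, so a refused action leaves evidence just like a successful one.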

2) Hallucinations are still here—courts are treating them as professional failures

Hallucinations (fabricated citations, incorrect case summaries, made-up “facts”) remain one of the most visible legal AI failure modes. Courts have already sanctioned and fined lawyers over AI-generated filings, as in Mata v. Avianca (S.D.N.Y. 2023), where attorneys were sanctioned for submitting a brief that cited nonexistent cases. Mainstream coverage in 2025 highlighted how persistent this problem is when lawyers treat generative tools like authoritative databases.

In 2026, what changes isn’t that hallucinations disappear—it’s that tolerance for “AI made me do it” continues to drop. Bar guidance and judicial expectations increasingly treat verification as a baseline duty.

What to do in 2026: implement “verification by design.” For research and citations, require source links, require human review, and prefer retrieval-grounded systems that show where an answer came from (and what it could not confirm).
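
One way to picture "verification by design" is a gate that refuses to release any claim lacking a source or a human check. The sketch below is illustrative only: the `Claim` fields and the three bucket names are assumptions made for this example, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    # Where the tool says the claim came from (hypothetical field).
    source_urls: list = field(default_factory=list)
    # Set to True only after a reviewer has actually checked the sources.
    human_verified: bool = False


def review_status(claims):
    """Sort claims into buckets; only 'ready' claims should reach a filing."""
    status = {"ready": [], "needs_review": [], "unsupported": []}
    for claim in claims:
        if not claim.source_urls:
            status["unsupported"].append(claim)   # nothing to verify against
        elif not claim.human_verified:
            status["needs_review"].append(claim)  # sourced, not yet checked
        else:
            status["ready"].append(claim)
    return status


def can_file(claims) -> bool:
    """True only when every claim is both sourced and human-verified."""
    status = review_status(claims)
    return not status["unsupported"] and not status["needs_review"]
```

A source link alone does not make a claim "ready" here. The link only makes human verification possible, which is the point of the practice.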

3) Ethical duties are clearer: competence + confidentiality + communication

One of the most important developments for legal AI adoption is that professional guidance is no longer vague. The American Bar Association’s Formal Opinion 512 (July 2024) addresses lawyers’ use of generative AI, tying obligations to core duties like competence, confidentiality, and communication with clients, and emphasizing that lawyers must understand the tools well enough to use them responsibly.

For California practitioners and teams working with California counsel, the State Bar of California has published practical guidance on generative AI built around the same themes: lawyers remain responsible for outputs, must protect client data, and must manage the novel risks of generative systems.

What to do in 2026: treat AI literacy as mandatory training—not optional. Your team should know what data is being shared, what is stored, what can be reproduced, and where human review is required.

4) Regulation pressure increases—especially for organizations touching the EU

Even if you’re US-based, 2026 is a major compliance year if you serve EU customers, process EU data, or deploy AI features into products used in the EU. The EU AI Act applies in stages: bans on certain prohibited practices began applying in February 2025, obligations for general-purpose AI models followed in August 2025, and most remaining requirements, including those for many high-risk systems, are scheduled to apply from August 2, 2026.

This matters for legal AI because contract review, employment-related analysis, and compliance tooling can drift toward regulated territory depending on use case, customer type, and the degree of automation.

What to do in 2026: map your legal AI use cases to risk categories early. Ask vendors for documentation, controls, and clarity on how they support compliance obligations—before procurement, not after rollout.

5) Data privacy and confidentiality will be the “silent dealbreaker”

Legal work is confidentiality-heavy by nature. The risk in 2026 isn’t just “did the model get the clause wrong?” It’s “did we expose privileged, sensitive, or regulated data in ways we can’t unwind?”

Common failure patterns include:

- Teams pasting sensitive terms into consumer AI tools without understanding retention or training policies

- Vendors subcontracting processing to third parties without clear controls

- Lack of clear deletion, auditability, or access controls

- Prompt and file leakage through integrations and plugins

Ethics guidance repeatedly emphasizes confidentiality duties, and the enforcement trend across jurisdictions is moving in the same direction: organizations are expected to know how tools handle data.

What to do in 2026: require clear answers to: Where is data processed? Is it retained? Is it used for training? Who can access it? What logs exist? How fast can we delete it?

6) “Accuracy” won’t be enough—teams will demand explainability and audit trails

In 2025, many organizations were satisfied with “pretty good” outputs plus human review. In 2026, that posture matures: legal teams increasingly want traceability—what sources were used, what assumptions were made, what changed between versions, and who approved it.

That’s why governance resources like NIST’s Generative AI Profile (NIST AI 600-1), a companion to the AI RMF, focus heavily on measurement, monitoring, and documentation across AI system operation, not just output correctness.

What to do in 2026: look for systems that can produce defensible audit trails (especially for regulated industries, procurement, and enterprise customers).
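
A "defensible" audit trail usually means one that records what changed and who acted, and that makes later tampering detectable. The following is a minimal sketch of one common technique, hash chaining, using only the Python standard library. The record fields and function names are assumptions for illustration; a production system would also need timestamps, secure storage, and key management.

```python
import difflib
import hashlib
import json


def append_entry(trail, actor, action, old_text, new_text):
    """Append a record whose hash covers its content and the previous hash."""
    diff = list(difflib.unified_diff(
        old_text.splitlines(), new_text.splitlines(), lineterm=""))
    record = {
        "actor": actor,
        "action": action,
        "diff": diff,  # what changed between versions
        "prev_hash": trail[-1]["hash"] if trail else "",
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    trail.append(record)
    return record


def verify(trail):
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev = ""
    for record in trail:
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if record["prev_hash"] != prev:
            return False
        if hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev = record["hash"]
    return True
```

Because each record's hash depends on the one before it, changing an old entry invalidates the chain from that record onward, which is what lets a reviewer show the history was not rewritten after the fact.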

7) Bias, quality, and “model drift” show up in subtle contract work

Bias in legal AI isn’t only about demographics. In contract workflows, bias and quality problems can look like:

- Risk scoring that consistently over-flags certain clause patterns without context

- Negotiation suggestions that reflect a specific jurisdiction or industry norm inappropriately

- Summary outputs that omit “unfavorable” sections due to model behavior or prompt patterns

- Drift over time as models update and outputs change, silently affecting consistency

Industry guidance increasingly lists bias and output quality as core legal AI risks that practitioners must manage.

What to do in 2026: establish evaluation benchmarks. Track performance on your own document sets (NDAs, MSAs, SOWs) and re-test after model updates or configuration changes.
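
A benchmark like this can be very small and still be useful. The sketch below scores a clause-flagging function against a labeled document set and reports whether a new version regressed. Everything here is hypothetical: the two toy "models" are keyword matchers standing in for a real tool, and the labels and the 0.02 tolerance are placeholders you would replace with your own.

```python
def precision_recall(predicted: set, expected: set):
    """Per-document precision and recall for flagged clause types.

    Convention used here: an empty prediction has precision 1.0 (no false
    positives) and an empty expectation has recall 1.0 (nothing to miss).
    """
    true_pos = len(predicted & expected)
    precision = true_pos / len(predicted) if predicted else 1.0
    recall = true_pos / len(expected) if expected else 1.0
    return precision, recall


def evaluate(flag_clauses, benchmark):
    """Average precision and recall of `flag_clauses` over the benchmark."""
    scores = [
        precision_recall(set(flag_clauses(doc["text"])), set(doc["expected_flags"]))
        for doc in benchmark
    ]
    n = len(scores)
    return (sum(p for p, _ in scores) / n, sum(r for _, r in scores) / n)


def regressed(baseline, candidate, tolerance=0.02):
    """True if precision or recall dropped by more than `tolerance`."""
    return any(b - c > tolerance for b, c in zip(baseline, candidate))


# Toy labeled set and toy "model versions" for illustration only.
benchmark = [
    {"text": "unlimited liability; auto-renewal", "expected_flags": ["liability", "renewal"]},
    {"text": "standard nda", "expected_flags": []},
]


def old_model(text):
    return [k for k in ("liability", "renewal") if k in text]


def new_model(text):
    return [k for k in ("liability",) if k in text]


baseline = evaluate(old_model, benchmark)
candidate = evaluate(new_model, benchmark)
needs_attention = regressed(baseline, candidate)
```

In this toy run the new version misses the renewal clause, so recall falls and `needs_attention` is true. Running the same check after every model or configuration change is what turns "re-test" into a routine.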

8) Vendor risk gets more serious: “AI inside” isn’t a security posture

In 2026, many legal AI products will compete on packaging—agents, copilots, add-ons, integrations—without meaningful transparency on what’s happening behind the scenes. Some tools will be excellent. Others will be risky wrappers around generic models with limited controls.

What to do in 2026: treat legal AI like any high-impact vendor:

- Demand clear security documentation and data handling terms

- Confirm whether inputs are used for training

- Require role-based access, audit logs, and configurable retention

- Validate how the tool performs on your contract types

- Ensure the product supports human-in-the-loop review (not just “approve and send”)
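
If it helps to make the checklist above enforceable in procurement, it can be encoded as data so that gaps are reported mechanically. This is a small sketch; the key names and the `assess_vendor` function are made up for this example and simply mirror the bullets above.

```python
# Each key mirrors one bullet from the checklist; names are illustrative.
REQUIRED_CONTROLS = {
    "security_documentation": "Security documentation and data handling terms provided",
    "training_use_confirmed": "Whether inputs are used for training is confirmed",
    "role_based_access": "Role-based access supported",
    "audit_logs": "Audit logs available",
    "configurable_retention": "Retention is configurable",
    "validated_on_our_contracts": "Performance validated on our contract types",
    "human_in_the_loop": "Human-in-the-loop review supported",
}


def assess_vendor(answers: dict) -> list:
    """Return descriptions of controls the vendor has not explicitly confirmed.

    Anything other than an explicit True (missing, None, "pending") is a gap.
    """
    return [
        description
        for key, description in REQUIRED_CONTROLS.items()
        if answers.get(key) is not True
    ]
```

Treating a missing or ambiguous answer as a gap, rather than as a pass, keeps the default on the cautious side.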

The upside: 2026 can be the year legal work becomes faster and more trustworthy

Despite the risks, the upside in 2026 is substantial. Done well, legal AI reduces repetitive drafting, accelerates review cycles, and makes risk visible earlier, before a bad clause becomes a costly dispute. The organizations that win won’t be the ones who “use AI the most.” They’ll be the ones who use it with the right controls: grounded outputs, privacy-first handling, clear review steps, and provable audit trails.

Where Legal Chain fits

At Legal Chain, we believe legal AI in 2026 must be built for trust—not just speed. That means AI that supports real contract workflows, human validation, and security-first handling designed for sensitive documents.

If your 2026 goal is to move faster without sacrificing defensibility, this is the year to upgrade from “AI experiments” to governed legal intelligence.

Want to see what that looks like in practice? Join the Legal Chain beta and help shape the next standard for secure, auditable legal AI.